Your Unshared Photos Are Fueling Meta’s AI: A Privacy Deep Dive

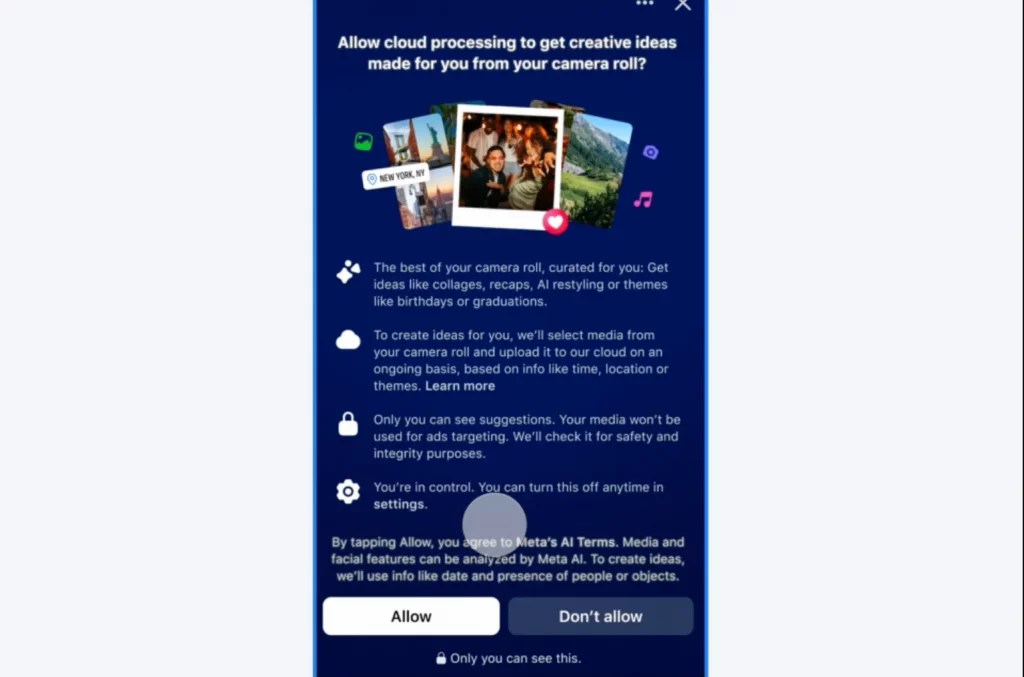

Meta has introduced a new, optional AI feature for Facebook users in the US and Canada that blurs the line between convenience and digital privacy. The tool scans a user’s local camera roll (photos and videos not yet uploaded to the platform) to suggest which ones to share.

How the AI Scans Your Gallery

According to reports, once activated, the feature allows Meta’s AI to analyze the contents of a user’s smartphone camera gallery and uploads these unshared images to Meta’s cloud servers. The stated goal is to uncover what the company calls “hidden treasures”: photos lost among “screenshots, receipts, and random pictures.” The AI then offers to create and share edited versions or collages of these personal images.

The Data Usage Policy: A Critical Nuance

When Meta first tested this feature, it assured users that their private, unshared photos would not be used to train its AI models, but it stopped short of ruling out that possibility for the future. The company’s latest announcement clarifies its position, with a critical condition.

A Meta spokesperson stated, “We do not use the files in your camera gallery to improve AI at Meta unless you choose to edit or share that media with our AI tools.” This means that the initial scan and upload used to generate suggestions do not, in themselves, feed the AI’s training data. However, once a user acts on a suggestion, either by editing the photo with an AI tool or by sharing it on Facebook, that media may be used to “improve AI at Meta.”

The feature shows how personal digital archives are becoming a potential resource for corporate AI development. Because training use is triggered by editing or sharing rather than by the scan itself, the onus is on users to understand what acting on one of these AI-driven suggestions implies.